STX B+ Tree Measuring Memory Usage with malloc_count

Posted on 2013-05-05 09:44 by Timo Bingmann at Permlink with 2 Comments. Tags: #stx-btree #frontpage

Within the next few days, a new version of my popular STX B+ Tree library will be released. In light of this imminent release, I created a memory profile with my malloc_count tool, comparing the requirements of four different C++ maps with integer keys and values.

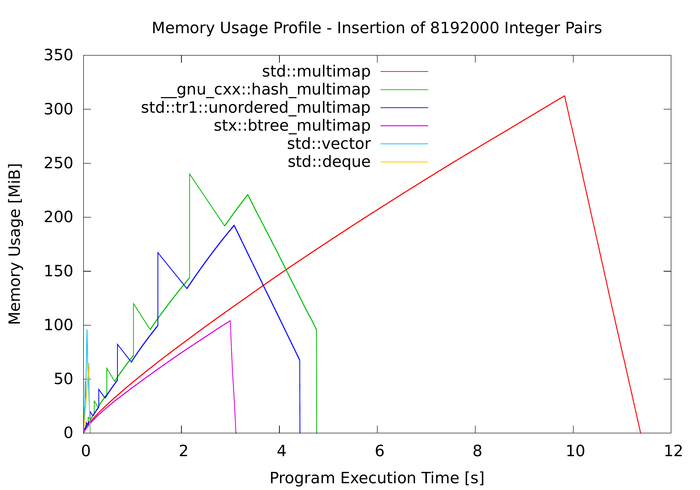

The test is really simple: create a map container, insert 8 Mi random integer key/value pairs, and destruct it. The memory profile shows the amount of memory over time as allocated via malloc() or new. The test encompasses the usual gcc STL's map which is a red-black tree, the older hash_map from gcc's STL extensions, the newer gcc C++ tr1::unordered_map, and of course the stx::btree_map with default configuration. As a reference, I also added the usual STL vector and deque (not map containers), to verify the plotting facilities.

To isolate heap fragmentation, the profiler fork()s separate process contexts before each run. To avoid problems with multiple equal random keys, the multimap variant of all containers is used. Here is the memory profile (also included in the STX B+ Tree tarball):

First notice std::vector's memory usage on the very left: as expected it is very fast as it's just a dynamically resizing array. But due to doubling of the internal buffer it uses 1.5 times more than the raw storage: 8 Mi key/value pair each 8 bytes (as ints are still 4 bytes) take 64 MiB. Doubling required additionally 32 MiB, thus in total 96 MiB are needed, which matches the plotted spike. The std::deque is slightly slower, but uses only about 64 MiB of raw storage.

Now regard the memory profiles of the map containers: the red-black tree has the highest memory overhead for integer pairs, as two pointers are added in each node (enlarging each node size times three). However, the hash maps also have a high memory overhead. Apparently, both hash map implementations dynamically resize when a fill threshold is reached, requiring temporary memory while reinserting into the new table. Of course, the hash maps are unsorted and allow excepted O(1) access time.

However, the map container with lowest memory overhead (and fastest for insertion time) was STX B+ Tree. It required only about the same amount of memory as a vector, one third of an STL map, or about half of a hash map. This is due to the fact, that the B+ tree contains fewer pointers. Consequentially, there is really no reason not to replace an STL map with the STX B+ Tree for mapping integer keys to pointer or integer values. That includes integer to std::string maps or other indirectly referenced objects. Of course, for larger key/value data types this is may not be true, as here the gain by reducing pointers may not out-weigh the overhead of additionally allocated "empty" slots.

A few questions regarding your malloc count: Are you also considering the overhead of the management datastructures of the allocator. I don't know how todays malloc allocators work, but I'm sure you need at least a pointer, the size of the block and maybe additional bytes to ensure alignment for each allocated memory block. So allocating less objects (esp. if they are small) should also considerably shrink the real memory footprint.

While we are on this topic: My favorite allocator is the "obstack" one from gcc/glibc, it's an arena style allocator and is sometimes considerably faster if you allocate alot of small obejcts though obviouslty it's not a general purpose allocator.

Also a note to the memory consumption of the hashmaps: AFAIK the STL/gnu STL only comes with hashmaps using linked lists to resolve collisions, you might try a hashmap using linear probing like the one from the "google sparse hash" project.